AI: Technology Too Important to Lose

The geopolitical and economic implications of AI are profound and multifaceted. Missing out on AI would be a critical failure for any nation.

The U.S. has recently imposed additional restrictions on AI chips and chip-making equipment, underscoring its determination to safeguard its leadership in AI technology. By subjecting advanced AI chips to export controls of a kind usually associated with weapons, the U.S. signals that it views maintaining an edge in AI as crucial. In this piece, we revisit some of our earlier research on the defense and economic advantages of AI and offer fresh perspectives on its strategic significance. (Read our previous piece for background on the controls.)

These controls were strategically crafted to address, among other concerns, the PRC’s efforts to obtain semiconductor manufacturing equipment essential to producing advanced integrated circuits needed for the next generation of advanced weapon systems, as well as high-end advanced computing semiconductors necessary to enable the development and production of technologies such as artificial intelligence (AI) used in military applications.

Advanced AI capabilities, facilitated by supercomputing built on advanced semiconductors, present U.S. national security concerns because they can be used to improve the speed and accuracy of military decision making, planning, and logistics. They can also be used for cognitive electronic warfare, radar, signals intelligence, and jamming. These capabilities can also create concerns when they are used to support facial recognition surveillance systems for human rights violations and abuses. (U.S. Department of Commerce, "Commerce Strengthens Restrictions on Advanced Computing Semiconductors, Semiconductor Manufacturing Equipment, and Supercomputing Items to Countries of Concern")

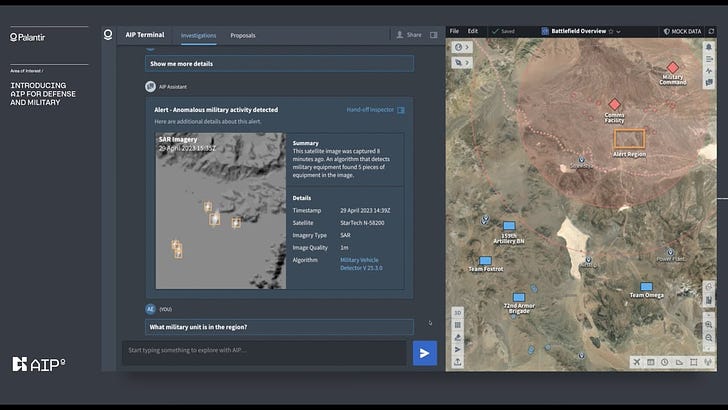

AI Creates Military Advantage

The way wars are fought has evolved continually throughout history. From cold steel and gunpowder to tanks and jet fighters, each technological advance has brought its own strategic implications. Yet nothing seems to be reshaping modern warfare quite like the integration of artificial intelligence (AI). Recent analyses suggest that AI's involvement in war goes beyond strategy and tactics: it influences the very psychology of conflict.

A New Era of Warfare

Recent events and studies suggest that AI acts as an influencer, subtly reshaping how decisions are made in conflict. This has been evident in the war in Ukraine, where AI-enabled drones with Intelligence, Surveillance, and Reconnaissance (ISR) capabilities have furnished real-time data for informed tactical decisions. Developments such as Lethal Autonomous Weapons Systems (LAWS) and drone swarms are further examples of how AI fundamentally alters combat dynamics, making traditional forms of engagement seem archaic.

Furthermore, the role of AI in cyber warfare can't be overstated. Defensively and offensively, AI algorithms can counter cyber threats or exploit vulnerabilities at unprecedented speed. AI is also being applied to logistics, war-gaming, electronic warfare, and even battlefield medical care. Nor is its impact limited to combat: AI-augmented training modules are helping prepare a new generation of soldiers, further emphasizing the symbiotic relationship between human and machine in future combat.

‘AI can be a force multiplier, enabling security teams not only to respond faster than cyberattackers can move but also to anticipate these moves and act in advance.’ (Deloitte, Cyber AI: Real defense)

The Push to Redefine the Metrics of Military Power

Will Roper, former Assistant Secretary of the Air Force for Acquisition, Technology and Logistics, has commented on the Pentagon's hardware-centric mindset. Traditional metrics, like the number of ships or planes, still dominate budgetary discussions. However, as Roper pointed out, the real measure in today's world lies in the digital power of these assets. From sensors and algorithms to intelligent munitions, these "digital capabilities" are what determine a force's real strength.

“Ships, airplanes, tanks and ground troops still matter, but what matters more is their digital capabilities: sensors to detect enemy forces, algorithms to process data, networks to transmit information, command-and-control to make decisions, and intelligent munitions to strike targets.” Roper said the DoD should be focusing on “decision superiority… but it’s not the way the budget is built.” (Paul Scharre, Four Battlegrounds)

Transferring AI Into Military Power

Historically, the U.S. Department of Defense (DoD) was at the forefront of technological innovation, with groundbreaking advancements like GPS, microprocessors, and the early internet. This dominance in the 1960s and '70s solidified the military's role as a pacesetter in the realm of Research & Development (R&D). However, recent shifts in R&D expenditure trends suggest a significant transition. The lion's share of innovation is now emanating from the private sector, rendering the DoD more of a consumer than an instigator of cutting-edge technologies.

The implications of this transformation are twofold. On the one hand, the DoD stands to benefit from the private sector's technological dynamism, potentially capitalizing on innovations without bearing the accompanying R&D expenditure. On the other hand, the DoD finds itself in uncharted waters. Without the leverage to steer the direction of technological innovation, it faces the Herculean task of rapidly adapting and integrating these external advances. The pressing concern is that any failure in this 'spin-in' mechanism could see the U.S. military sidelined from pivotal technological shifts, undermining its global supremacy.

The DoD's notoriously slow pace of technology adoption, rooted in industrial-era metrics and mindset, poses a significant challenge. As the yardstick of military might shifts from sheer numbers to digital prowess, the DoD's budgetary and operational processes need to move from waterfall to agile. For the DoD to truly harness the potential of AI and other innovations, a cultural and strategic overhaul is imperative.

However, No Single Technology is a Panacea

Reflecting on the Gulf and Iraq Wars offers a lens for envisaging AI's potential role. The technological dominance displayed by the coalition forces, chiefly the U.S., in these conflicts marked the shift from traditional to technologically advanced warfare. Just as precision-guided munitions and advanced communication systems were game-changers then, AI promises a similar revolution now. Its essence lies in the data acquisition, cognitive advantage, and fast, consistent decision-making that AI brings, which could redefine military metrics in the future.

After the U.S. and its coalition partners achieved a swift victory in the initial stages of these conflicts, showcasing their technological and operational dominance, the nature of the war changed. As the conflict in Iraq became protracted, especially during the insurgency phase, a form of stasis set in. Local militias and insurgent groups adapted to the advanced technology and tactics of U.S. forces. These groups leveraged asymmetric warfare, urban environments, and the element of surprise to negate the technological advantage the U.S. held. Improvised explosive devices (IEDs), guerrilla tactics, and blending into civilian populations proved that even the most technologically superior force could be challenged by adaptive and determined adversaries. The transition from conventional war to counter-insurgency required the U.S. and its allies to recalibrate their strategies, underscoring that pure technological power does not guarantee an enduring victory in complex terrain.

The rapid ascent of China as a technological powerhouse underscores a new era where the U.S. and its allies face a near-peer adversary that is not only catching up but, in some domains, outpacing them. Much like Japan's meteoric rise in the early 20th century, China's trajectory in the realms of AI, quantum computing, and advanced missile systems has redrawn the strategic landscape. The challenges are twofold: not only does the West have to successfully integrate and adapt to AI warfare, but it must also anticipate and counter similar or even superior capabilities from China. It's a dynamic reminiscent of the intense naval competition between the U.S. and Japan during WWII, but with an added layer of complexity. While AI has the potential to revolutionize military strategy and tactics, its ubiquity and accessibility mean that dominance is no longer guaranteed by mere adoption. The key will lie in mastery, constant innovation, and the agility to deploy AI effectively in a rapidly evolving battle environment. The stakes are higher, and the margin for error, thinner than ever before.

The Paradox of Innovation and Strategy

The role of innovation in the strategic realm is paradoxical. At face value, the longer a technical innovation is developed and refined, the better it should perform. But this isn't always the case in strategy. For scientists and engineers, utility and performance may seem synonymous, but the equation changes dramatically once an innovation is subject to strategic countermeasures.

As soon as a groundbreaking innovation emerges, adversaries begin looking for ways to neutralize or circumvent it. This reality challenges the conventional wisdom of pursuing the optimal or most elegant solution. Instead, there is value in suboptimal but rapid solutions that give adversaries less time to react. Likewise, suboptimal but resilient innovations can better withstand attempts to undermine them. Technology strategy rewards adaptability and speed: competing global powers will have to be adaptable, move fast, and keep their opponents guessing.

AI Creates an Economic Windfall

The transformative potential of Generative Artificial Intelligence (AI) is set to usher in an economic paradigm shift. As sectors ranging from banking to retail anticipate profound shifts in productivity and revenue, it's imperative to grasp the total addressable market (TAM) of AI. In this first section we optimistically explore the opportunities for AI's financial prospects, anchored in its automation capabilities, labor productivity growth, and its imminent role in reshaping industries globally.

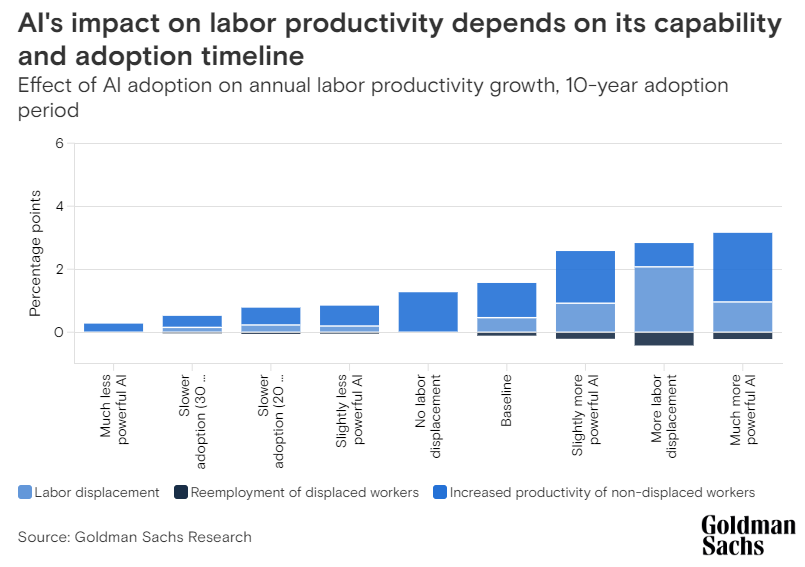

Numerous research firms are eagerly dissecting the transformative effects of Artificial Intelligence (AI). Amidst the cacophony, voices like Goldman Sachs have distinguished themselves. The numbers for generative AI are nothing short of astonishing:

Generative AI could raise annual US labor productivity growth by just under 1.5 percentage points per year over a 10-year period following widespread business adoption.

Generative AI could eventually increase annual global GDP by 7%, or almost $7 trillion, over a 10-year period.

Generative AI will be disruptive to jobs: “We find that roughly two-thirds of current jobs are exposed to some degree of AI automation, and that generative AI could substitute up to one-fourth of current work.”

AI investment could approach 1% of US GDP by 2030 if it increases at the pace of software investment in the 1990s. (For context, US and global private investment in AI totaled $53 billion and $94 billion, respectively, in 2021, a fivefold increase in real terms from five years prior.)

Key findings from McKinsey's report on generative AI's potential impact:

Generative AI's potential economic contribution ranges from $2.6 trillion to $4.4 trillion annually.

Four business functions stand to benefit most: customer operations, marketing and sales, software engineering, and R&D.

Banking could see gains of $200 billion to $340 billion yearly; retail may benefit by $400 billion to $660 billion annually.

Generative AI can automate tasks that occupy up to 60-70% of workers' current time.

Half of today's work could be automated between 2030 and 2060 due to generative AI.

Labor productivity might grow by 0.1% to 0.6% yearly until 2040.

Combined with all other technologies, work automation could add 0.2 to 3.3 percentage points to productivity growth annually.
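To make the two sets of productivity figures easier to compare, the sketch below compounds them into cumulative gains. It is a back-of-the-envelope illustration, not either firm's methodology: it treats the extra growth as compounding on its own (ignoring interaction with baseline growth) and assumes McKinsey's "until 2040" horizon starts in 2023, i.e. 17 years. The function name `cumulative_gain` is hypothetical.

```python
def cumulative_gain(extra_growth: float, years: int) -> float:
    """Cumulative level effect of an extra annual growth rate.

    Simplification: the extra growth compounds on its own; interaction
    with baseline productivity growth is ignored.
    """
    return (1 + extra_growth) ** years - 1


# Goldman Sachs: just under 1.5 points of extra growth a year for 10 years.
goldman = cumulative_gain(0.015, 10)

# McKinsey: 0.1% to 0.6% a year until 2040; 17 years assumes a 2023 start.
mckinsey_low = cumulative_gain(0.001, 17)
mckinsey_high = cumulative_gain(0.006, 17)

print(f"Goldman Sachs, 10 years: {goldman:.1%}")
print(f"McKinsey, 2023-2040: {mckinsey_low:.1%} to {mckinsey_high:.1%}")
```

Under these assumptions, the Goldman Sachs figure compounds to roughly 16% higher productivity after a decade, while McKinsey's generative-AI-only range works out to roughly 2% to 11% by 2040. The two estimates differ in scope and horizon, so the comparison is indicative only.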

The Low-Hanging Fruit: Information-Based Systems

In AI, the first to reap rewards are often systems that rely primarily on information processing, without the need for complex real-world interaction. Examples include chatbots, recommendation systems, and financial forecasting tools. Here, input and output are streamlined: data is readily available in digital form, and the output is typically a digital action, like sending a message or recommending a song. The economic advantages are clear. The real complexity emerges when AI must interact with the physical world, for example by driving a car, controlling a drone, or performing a surgical procedure. This demands not just data processing but also the accurate collection of real-time data and the ability to act on it through actuators. Information-based AI startups may therefore offer quicker returns, given their ease of integration and scalability, whereas real-world AI applications are a longer-term bet: potentially higher returns, accompanied by greater risk and longer maturation periods.

If You Can Pay People $5/hour Why Would You Adopt AI?

Adoption is also likely to be faster in developed countries, where higher wages make automation economically feasible sooner. Even if the potential for technology to automate a particular work activity is high, the cost of doing so has to be weighed against the cost of human wages. In countries such as China, India, and Mexico, where wage rates are lower, automation adoption is modeled to arrive more slowly than in higher-wage countries.
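The wage comparison above amounts to a break-even test: automation pays off once the hourly wage exceeds the automation's cost per hour of work it replaces. The sketch below assumes straight-line amortization with no financing costs; the function names and all dollar figures are hypothetical, chosen only to illustrate the logic.

```python
def break_even_wage(upfront_cost: float, lifetime_years: float,
                    annual_running_cost: float,
                    hours_replaced_per_year: float) -> float:
    """Hourly wage at which automating a task costs the same as paying a person.

    Straight-line amortization, no financing cost: a deliberate simplification.
    """
    annual_cost = upfront_cost / lifetime_years + annual_running_cost
    return annual_cost / hours_replaced_per_year


def should_automate(wage_per_hour: float, **automation) -> bool:
    """Automate only if the human wage exceeds the break-even wage."""
    return wage_per_hour > break_even_wage(**automation)


# Hypothetical figures for illustration only (not from any cited study).
system = dict(upfront_cost=50_000, lifetime_years=5,
              annual_running_cost=5_000, hours_replaced_per_year=2_000)

print(break_even_wage(**system))        # 7.5 (dollars per hour)
print(should_automate(5.0, **system))   # False: the $5/hour worker is cheaper
print(should_automate(30.0, **system))  # True
```

With these invented numbers the break-even wage is $7.50 per hour, so a $5/hour worker remains cheaper while a $30/hour worker does not. As automation costs fall, the break-even wage falls with them, and lower-wage economies cross the threshold later than higher-wage ones.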

Symbiotic Military-Commercial Development

The symbiotic potential between the Department of Defense (DoD) and corporate giants presents an exciting canvas for future technological advances. Historically, as with the internet and GPS, military innovations have often been groundbreaking and well-resourced. If the DoD were to pass such advances down to the commercial realm, we could see a revolutionary leap in civilian technology, with Tesla's vehicles or Boeing's aircraft benefiting from defense-grade AI and navigation systems. This becomes all the more compelling given the DoD's appetite for long-term investments and complex challenges like real-world AI implementations, areas where the private sector often exercises caution because of the associated risks. In such a partnership, the DoD would provide the rigorous research and development foundation, while companies like Tesla and Boeing provide scalability and widespread application.

AI Matchup: Contested Leadership

Both McKinsey and Goldman Sachs paint a compelling picture of generative AI's transformative potential, especially the productivity boost to developed economies. While predicting a precise 10-year trajectory remains elusive, their projections of an impact worth trillions of dollars on the global economy serve as a valuable base case. These figures could swing higher or lower depending on a myriad of factors. Beyond the numbers, the geopolitical implications of AI's ascendancy are profound and multifaceted. What is clear is that missing out on AI would be a critical failure for any nation, as the U.S. decision to subject advanced AI chips to controls similar to those on military weapons illustrates. We explore the ripple effects of these dynamics in the next newsletter.

In evaluating global AI leadership, four elements are vital: data, algorithms, hardware, and talent. Data drives AI, and as more devices gather it, AI systems become more accurate. Algorithms turn this data into meaningful insights, while high-powered hardware supplies the computing power to train and run them. Yet these systems are only as potent as the human experts behind them, which underscores the competition for top AI talent. Because algorithms spread quickly and are relatively easy to replicate, the edge often lies in proprietary data and advanced hardware. Nonetheless, human expertise is the bridge between these technical facets and real-world utility, and the institutions that channel these assets into tangible applications play a decisive role in AI leadership.

The current AI landscape presents a nuanced picture. The U.S. publishes around 30% more papers than China, but China is rapidly narrowing this academic gap. The U.S. also produces 70% of the most-cited papers worldwide, versus only 20% for China. However, when publications in CNKI (China National Knowledge Infrastructure, a leading Chinese academic database) are counted, China's output is five times that of the U.S.

Meanwhile, the AI sector's center of gravity has shifted significantly. Before 2010, academic institutions produced 80-100% of large-scale AI results; by 2020, that share had fallen below 10%, as startups and private enterprises with commercially driven agendas rose to prominence. In the startup ecosystem, the U.S. has 292 AI "unicorns" (startups valued at over $1 billion) with a combined worth of $4.6 trillion, while China has 69, valued at $1.4 trillion. Thus, while China's academic contribution is vast, its commercial translation lags behind the U.S. ecosystem. Expert consensus suggests that China's large language models (LLMs) trail U.S. models by roughly two years.
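The unicorn figures cited above can be turned into simple ratios. The sketch below uses only the counts and valuations given in the text; the helper name `average_valuation_bn` is hypothetical.

```python
# Figures as cited in the text: AI unicorn count and combined valuation (USD trillions).
ecosystems = {
    "U.S.": {"unicorns": 292, "valuation_tn": 4.6},
    "China": {"unicorns": 69, "valuation_tn": 1.4},
}


def average_valuation_bn(unicorns: int, valuation_tn: float) -> float:
    """Average valuation per unicorn, in USD billions."""
    return valuation_tn * 1_000 / unicorns


for name, eco in ecosystems.items():
    print(f"{name}: ${average_valuation_bn(**eco):.1f}bn per unicorn")

us, cn = ecosystems["U.S."], ecosystems["China"]
print(f"Count ratio (U.S./China): {us['unicorns'] / cn['unicorns']:.1f}x")
print(f"Valuation ratio (U.S./China): {us['valuation_tn'] / cn['valuation_tn']:.1f}x")
```

On these figures, the U.S. has about 4.2 times as many AI unicorns as China and about 3.3 times the combined valuation, an average of roughly $15.8 billion per U.S. unicorn versus $20.3 billion per Chinese one. Counts and valuations are only rough proxies for commercial translation.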

The U.S. private sector gives the DoD access to technology of great breadth and depth, but that technology still has to be integrated for defense use. The People's Liberation Army (PLA) starts from a weaker commercial foundation. Yet China's state-centric economic approach is malleable enough to funnel resources into projects identified as priorities. The U.S. holds the upper hand in algorithms and, notably, hardware. But China's relentless pursuit of hardware and talent, together with the ease with which algorithms transfer, makes these U.S. advantages marginal. However, if the U.S. were to tighten its hardware export controls dramatically, the gap would become far more pronounced. This raises the question: can China sustain its AI trajectory by securing access to top-tier hardware?

China is not ‘Ahead’ in Regulating Generative AI

A stark distinction between the U.S. and China is evident in China's LLM approval mechanism: overseen by the Cyberspace Administration of China (CAC), it mandates model-specific evaluations. This creates formidable barriers for public-facing, consumer-centric products such as chatbots, whereas business-focused applications, such as corporate productivity tools, may face a more lenient regulatory environment. Further compounding the issue, Chinese consumers show a discernible reluctance to pay for SaaS subscriptions. However, none of this precludes military innovation within China.

China's new domestic generative AI legislation raised questions about its integration with the existing censorship system. However, a recent draft by the National Information Security Standardization Technical Committee (TC260) provided clarity on the regulation of AI-generated content. This draft provides explicit guidelines for AI model training and moderation, addressing both universal concerns like algorithm biases and China-specific content.

Key provisions include:

Training: AI models use diverse corpora (text and image databases) for training. TC260 asks companies to diversify these databases and evaluate their quality. Any source with over 5% "illegal and negative information" should be excluded from training.

Moderation: AI firms are urged to employ moderators who improve content quality in line with national policies and in response to external complaints. Since content moderation already accounts for a significant share of the workforce at companies like ByteDance, human-driven censorship is expected to grow in the AI era.

Prohibited Content: Companies are instructed to identify keywords indicating unsafe or banned content. Political content against "core socialist values" and "discriminative" content, such as religious or gender-based biases, fall under this scope. AI models must then undergo tests to ensure less than 10% of their outputs breach these rules.

Subtle Censorship: While the standards focus on implementing censorship, they also advocate against overt censorship. For instance, rather than flatly rejecting prompts containing "Xi Jinping," AI should be calibrated to handle questions about the Chinese political system without blatant evasion.
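The two numeric thresholds in the TC260 draft described above (exclude any training source with over 5% flagged content; require fewer than 10% of tested outputs to breach the rules) can be expressed as simple checks. The sketch below is a hypothetical illustration, not the draft's prescribed procedure: naive keyword matching stands in for whatever classifier or human review a company would actually use, and all names are invented.

```python
from dataclasses import dataclass

SOURCE_THRESHOLD = 0.05  # exclude sources with OVER 5% flagged content
OUTPUT_THRESHOLD = 0.10  # require LESS THAN 10% of tested outputs to breach


@dataclass
class Source:
    name: str
    samples: list[str]  # sampled documents from this training source


def is_flagged(text: str, keywords: set[str]) -> bool:
    """Hypothetical stand-in for a real classifier: naive keyword match."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in keywords)


def flagged_share(texts: list[str], keywords: set[str]) -> float:
    """Fraction of texts that trip the keyword check."""
    if not texts:
        return 0.0
    return sum(is_flagged(t, keywords) for t in texts) / len(texts)


def filter_sources(sources: list[Source], keywords: set[str]) -> list[Source]:
    """Keep only sources whose flagged share does not exceed 5%."""
    return [s for s in sources
            if flagged_share(s.samples, keywords) <= SOURCE_THRESHOLD]


def passes_output_test(outputs: list[str], keywords: set[str]) -> bool:
    """A model passes if fewer than 10% of sampled outputs are flagged."""
    return flagged_share(outputs, keywords) < OUTPUT_THRESHOLD


keywords = {"banned phrase"}  # hypothetical placeholder keyword list
sources = [
    Source("clean_corpus", ["ordinary text"] * 100),
    Source("noisy_corpus", ["a banned phrase"] * 6 + ["ordinary text"] * 94),
]
kept = filter_sources(sources, keywords)
print([s.name for s in kept])  # ['clean_corpus']
```

Note the asymmetry in the wording above: a source at exactly 5% is kept, since only sources "over 5%" are excluded, while a model at exactly 10% fails, since "less than 10%" is required.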

These guidelines are non-binding recommendations but are expected to shape future regulations. Large tech firms in China, like Huawei and Alibaba, have influenced these standards, indicating the industry's aspirations concerning regulation.

In summary, as global discussions of AI regulation evolve, China's approach offers insight into potential content moderation methods and possible AI-era censorship strategies. However, China's rapid regulatory progress is not a gold standard for AI safety; it is an attempt to keep the technology within bounds acceptable to Chinese propaganda.

US Opportunity Adventurism is Unwavering

There's gold in them thar hills!

Regulation: it’s a term that has shaped the trajectory of numerous industries, particularly in the realm of emerging technologies. While many stakeholders argue that regulatory clarity is paramount for innovation, a retrospective look at sectors like cryptocurrency presents a strong counter-narrative.

Innovation Comes Before Regulation

Cryptocurrency, in its infancy, thrived amid the mist of regulatory uncertainty. From Bitcoin's launch in 2009 to the explosive proliferation of altcoins, the crypto space was characterized by rapid growth and dynamism, often unencumbered by clear regulatory guidelines. For many innovators, the lack of definitive regulations didn't deter them; instead, it provided an expansive playground where boundaries were yet to be drawn. The message was clear: innovation doesn’t necessitate regulation.

"We want to give the industry time to evolve. We want to give it time to mature. But we also need to protect investors and consumers." Gary Gensler November 2022

AI Investors Will do it for the TAM

Fast forward to today's digital landscape, where artificial intelligence has captivated venture capitalists. For these investors, AI represents an unmatched opportunity in the software era. Against a backdrop of software-sector downsizing and high interest rates, VCs also face a TINA ("there is no alternative") effect. Even without a clearly defined regulatory landscape, AI's potential ensures that risks will be taken and funds will flow generously into the domain.

The U.S. tech environment thrives, while Chinese startups face the brunt of technological sanctions and limited access to chips. China's tech giants, however, show greater resilience against these sanctions. While Beijing has the means to bolster startups, doing so could disrupt the natural market-driven forces of innovation, a topic we explore in the next newsletter.

Baptists and bootleggers

However, the U.S. startup ecosystem faces its own challenges here. The 'Baptists and bootleggers' dynamic, in which moralists and profit-driven entities push for the same regulatory outcome for different reasons, has raised concerns about regulatory capture. Such regulation can hamper the growth of emerging tech companies while enriching established ones.